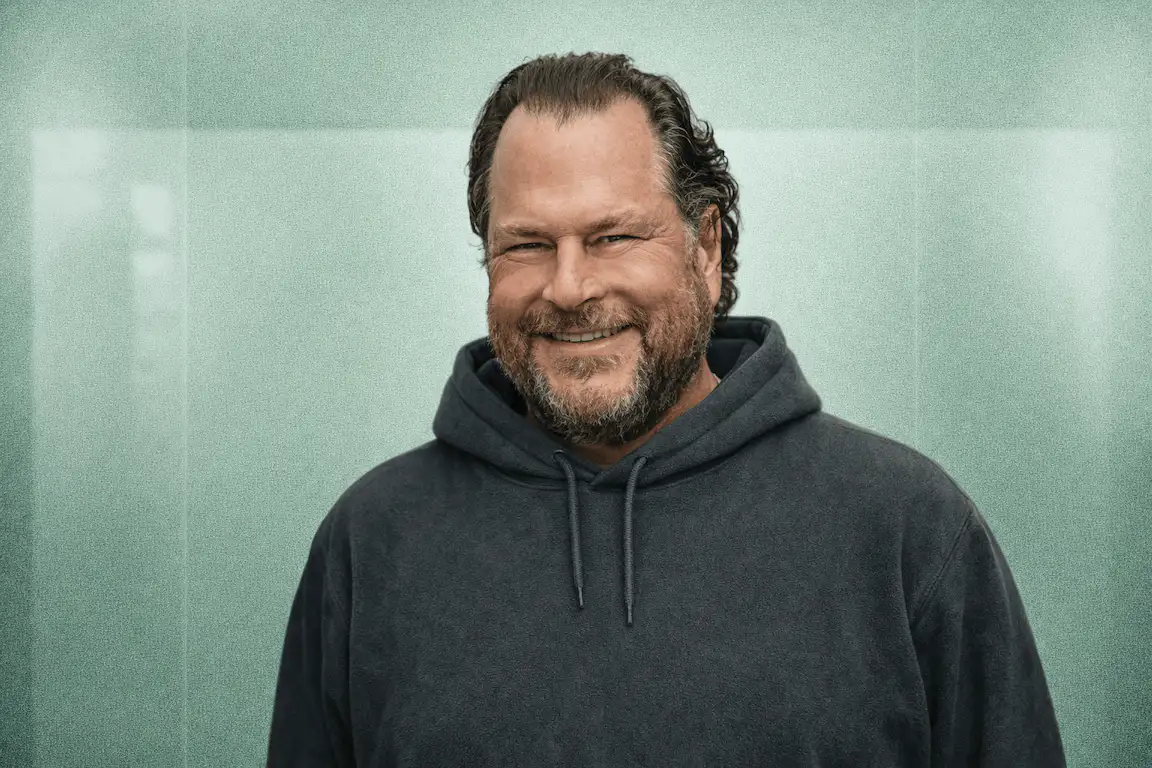

Salesforce is scaling back its reliance on large language models due to reliability issues, according to The Information. Senior VP of Product Marketing Sanjna Parulekar admitted that trust in AI models has declined: "All of us were more confident about large language models a year ago." This comes after CEO Marc Benioff disclosed on The Logan Bartlett Show that Salesforce reduced its support staff from 9,000 to 5,000 employees, a cut of roughly 4,000 roles, through AI agent deployment.

The company is now emphasizing that Agentforce can help "eliminate the inherent randomness of large models," marking a significant strategic pivot from the AI-first messaging that dominated enterprise software just months ago.

They're being honest about a fundamental problem. This is rare. Most enterprise AI vendors are still in "AI can do everything" marketing mode. Salesforce admitting that LLMs start omitting instructions when given more than eight directives—as CTO Muralidhar Krishnaprasad noted—is the kind of technical candor enterprise buyers need but rarely get.

Here's why this matters: Salesforce is eating its own dog food. It deployed Agentforce on its own help site, where the agent has handled over 1.5 million customer conversations. When Vivint, which uses Agentforce to serve 2.5 million customers of its own, found that satisfaction surveys were randomly not being sent despite clear instructions, the two companies worked together to implement "deterministic triggers" to ensure consistent delivery. That's real-world feedback driving product evolution.
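To make the "deterministic trigger" idea concrete, here is a minimal sketch of what it could look like. It is not Salesforce's or Vivint's implementation. The names `Conversation`, `SurveyOutbox`, and `close_conversation` are hypothetical, and the LLM is stood in for by any function that returns text. The point is only that the survey is sent by ordinary code on every close, so it cannot be "forgotten" by the model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Conversation:
    """Hypothetical record of one support conversation."""
    customer_id: str
    transcript: List[str] = field(default_factory=list)
    closed: bool = False


@dataclass
class SurveyOutbox:
    """Hypothetical stand-in for whatever system actually delivers surveys."""
    sent_to: List[str] = field(default_factory=list)

    def send(self, customer_id: str) -> None:
        self.sent_to.append(customer_id)


def close_conversation(
    conv: Conversation,
    generate_reply: Callable[[List[str]], str],
    outbox: SurveyOutbox,
) -> None:
    # LLM-driven part: only the wording of the closing message.
    conv.transcript.append(generate_reply(conv.transcript))
    conv.closed = True
    # Deterministic part: the survey is sent by plain code on every close,
    # regardless of what the model did or did not say.
    outbox.send(conv.customer_id)


# Usage: a stub "model" that never mentions the survey at all.
outbox = SurveyOutbox()
conv = Conversation(customer_id="c-1")
close_conversation(conv, lambda transcript: "Thanks, goodbye!", outbox)
```

The design choice is to take the survey step out of the prompt entirely: the model keeps the conversational job, and the business-critical side effect lives in code that runs unconditionally.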

The strategic pivot is actually smart engineering. Salesforce has spent the last year building what they call a "hybrid reasoning" approach—combining LLM flexibility for conversational tasks with deterministic logic (Flows, Apex, APIs) for business-critical processes. Their new Agent Graph and Agent Script tools let developers specify exactly which parts of a workflow should be LLM-driven versus rule-based.

This is sophisticated product thinking. They're not abandoning AI—they're building guardrails that make AI actually deployable in enterprises that can't tolerate "the model just forgot to send the survey."
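A rough sketch of the hybrid pattern, under stated assumptions: this does not use Agent Graph, Agent Script, Flows, or Apex, and every name in it (`classify_intent`, `refund_amount`, `handle`, the 30-day policy) is hypothetical. It only illustrates the split the approach implies: the model handles free text, its output is treated as untrusted, and money-moving logic stays in plain rules.

```python
from typing import Callable, Dict, Union

# Hypothetical set of intents the rule-based layer knows how to handle.
ALLOWED_INTENTS = {"refund", "order_status"}


def classify_intent(message: str, llm: Callable[[str], str]) -> str:
    """LLM-driven step: free text in, label out. Output is untrusted."""
    label = llm(message).strip().lower()
    # Hard handoff: anything outside the allowed set goes to a human.
    return label if label in ALLOWED_INTENTS else "human_handoff"


def refund_amount(order_total_cents: int, days_since_purchase: int) -> int:
    """Rule-based step. Assumed policy: full refund within 30 days, else none."""
    return order_total_cents if days_since_purchase <= 30 else 0


def handle(
    message: str,
    order_total_cents: int,
    days_since_purchase: int,
    llm: Callable[[str], str],
) -> Dict[str, Union[str, int]]:
    intent = classify_intent(message, llm)
    refund = 0
    if intent == "refund":
        # The model never computes the amount; the rule does.
        refund = refund_amount(order_total_cents, days_since_purchase)
    return {"intent": intent, "refund_cents": refund}


# Usage with a stub in place of a real model call.
result = handle("I want my money back", 5000, 10, lambda m: " Refund ")
```

If the stub returned something unexpected, such as "tell a joke", `handle` would report `human_handoff` and issue no refund, which is the kind of guardrail the paragraph above describes.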

The numbers still work. Despite the reliability concerns, Agentforce just hit $500 million in annual recurring revenue in Q3 2025, up 330% year-over-year. Over 9,500 paid deals have closed. The company is tracking toward $60 billion in organic revenue by FY30.

Benioff claims AI agents have maintained CSAT scores similar to those of human agents across 1.5 million conversations. If true, that's meaningful validation, even if the path to reliability required more engineering than initially expected.

They're transparent about what agents can't do. Phil Mui, SVP of Agentforce Software Engineering, wrote about AI "drift," in which chatbots lose focus on their primary objectives after users ask irrelevant questions. Acknowledging failure modes publicly builds trust, even if it undercuts the marketing narrative.

The timing creates a credibility problem. Benioff spent 2024 insisting AI wouldn't cause mass layoffs. "I keep looking around, talking to CEOs, asking, What AI are they using for these big layoffs? I think AI augments people, but I don't know if it necessarily replaces them," he told Fortune earlier this year. Then Salesforce cut 4,000 support roles.

Now we're learning the AI they used to justify those cuts has reliability problems significant enough to warrant a strategic pivot. Enterprise buyers paying attention are going to ask: did you cut people based on AI capabilities you're now admitting were oversold?

The stock tells a story. CRM hit an all-time high of $365 on December 4, 2024, riding the Agentforce hype. It's now trading around $260—down roughly 29% from that peak. Investors are clearly uncertain whether Agentforce can deliver on its promise. This admission won't help.

"Deterministic" is a euphemism for "less AI." When Salesforce says they're moving toward "deterministic automation" to "eliminate the inherent randomness of large models," they're essentially saying: we're falling back to traditional workflow automation for the parts that need to actually work. That's not a knock on the strategy—it's the right call—but it's worth understanding what's actually happening. The AI agents are becoming orchestration layers around traditional enterprise software, not replacements for it.

This is the first major enterprise AI vendor to publicly acknowledge the gap between LLM marketing and production reality. Expect others to follow.

We've been seeing this pattern across 700+ enterprise AI transformations: the companies that succeed treat AI as a tool requiring careful orchestration, not a magic replacement for business logic. The companies that struggle are the ones that believed the hype about autonomous agents handling complex workflows without guardrails.

Salesforce's pivot validates what enterprise buyers have been discovering the hard way: LLMs are incredibly powerful for conversational interfaces and creative synthesis, but unreliable for sequential, rule-dependent processes. The winning architecture combines both—which is exactly what Salesforce is now building.

For CIOs evaluating AI agents: ask vendors specifically about their approach to determinism. How do they handle multi-step processes? What happens when the LLM gets confused? Where are the hard handoffs to rule-based logic? Any vendor that doesn't have clear answers is probably still learning the lessons Salesforce just admitted publicly.

Salesforce's LLM reliability admission is simultaneously a warning and a maturation signal. The warning: if you're betting on AI agents to automate complex business processes end-to-end, you're probably overpromising to your board. The maturation: the industry is finally moving from "AI can do everything" to "AI plus traditional automation, carefully orchestrated, actually works."

Enterprise buyers should treat this as a buying opportunity—not for Salesforce stock, but for realistic AI strategy. The vendors who survive the next two years will be the ones building hybrid systems like what Salesforce is describing. The vendors still selling pure LLM magic will struggle to deliver.

My prediction: By Q2 2026, every major enterprise AI vendor will be marketing some version of "deterministic + AI" hybrid architecture. Salesforce just got there first—albeit by stumbling into it.

What's your experience with AI agent reliability in production? Have you seen similar instruction-following problems? Drop a comment or reach out directly.